SPIRIT (Master's thesis)

2015 – 2017, Kyoto University

Summary

- Master's thesis project at Kyoto University, developing a third-person view interface for teleoperating a drone with a monocular camera.

- Created a system that superimposed a CGI model of the drone onto an earlier image captured by the drone's own FPV camera.

- Designed, conducted, and analyzed user studies to evaluate the system's efficacy; the results showed large improvements across many metrics, even at a 2 Hz transmission rate.

SPIRIT stands for "Subimposed Past Image Records Implemented for Teleoperation".

Japan is prone to natural disasters. Inspecting collapsed structures paves the way for safer search-and-rescue operations in the aftermath. Flying drones in tight spaces is difficult, and the drone's own boundaries are not visible in the first-person view (FPV) feed, especially with a monocular camera. In addition, building materials may degrade signal quality, leading to a poor or intermittent connection.

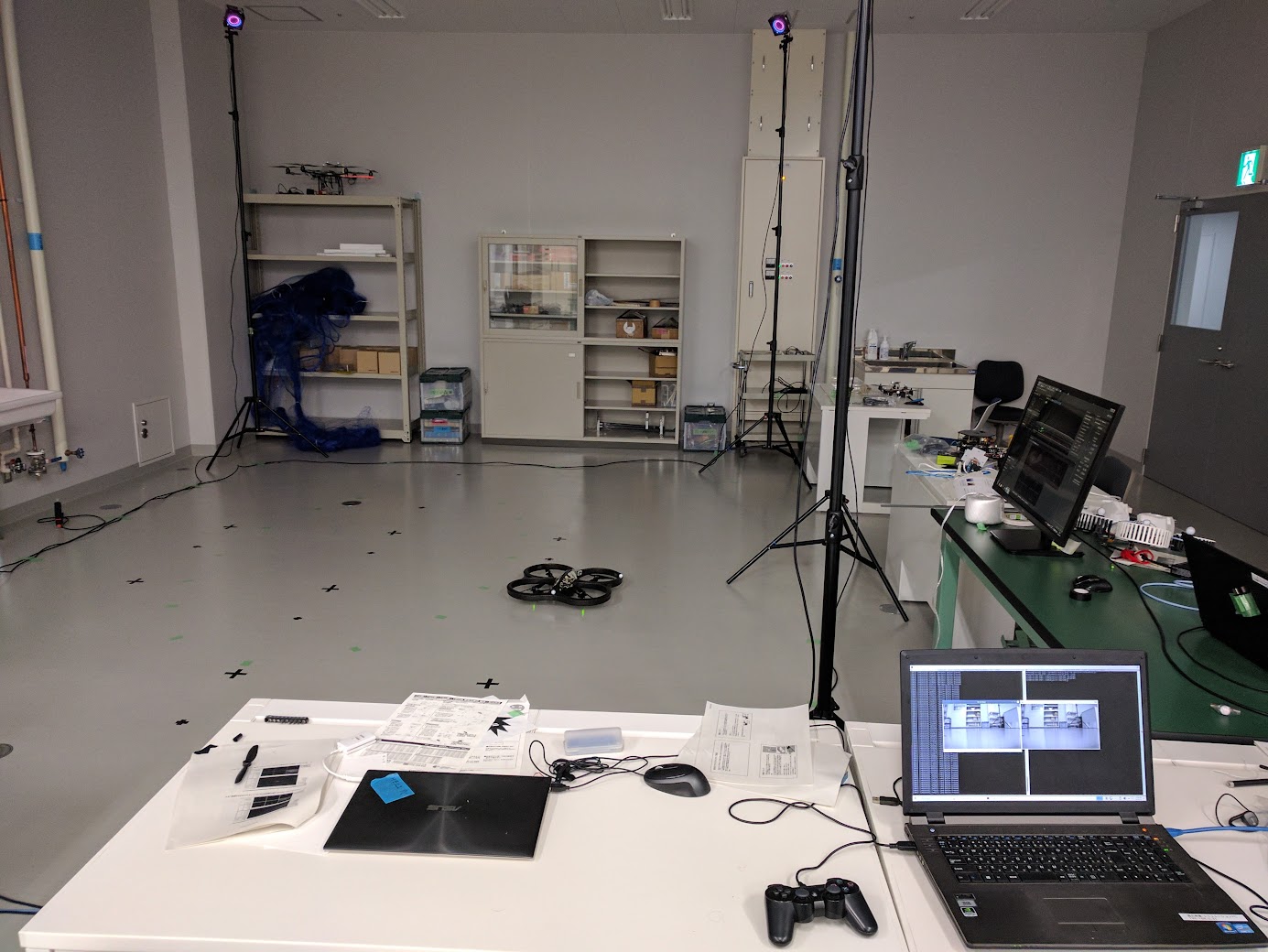

Building on the Mechatronics Lab's previous work on the use of Past Image Records for teleoperation, mobile manipulators, and narrow communication bands, I created a third-person view interface for controlling an AR.Drone, primarily using Python and ROS. A CGI model of the drone was superimposed onto an earlier image taken by the drone's FPV camera, chosen so that the image contains the drone's current position.
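The core geometric step, checking whether the drone's current position falls inside an earlier frame and where the CGI model should be drawn, can be sketched with a pinhole camera model. This is a minimal sketch under stated assumptions: each past image record is assumed to store the camera pose at capture time (for example from motion capture), the intrinsics are made-up values, and the names `PastImageRecord`, `Intrinsics`, and `project` are hypothetical rather than taken from the SPIRIT code.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]
Mat3 = Tuple[Vec3, Vec3, Vec3]


@dataclass
class PastImageRecord:
    """Hypothetical record: camera pose at the moment a frame was captured."""
    cam_position: Vec3  # camera origin in the world frame (e.g. from motion capture)
    cam_rotation: Mat3  # camera-to-world rotation matrix, given as rows
    # Assumed optical-frame convention: x right, y down, z forward.


@dataclass
class Intrinsics:
    """Pinhole intrinsics in pixels (illustrative values, not the AR.Drone's)."""
    fx: float
    fy: float
    cx: float
    cy: float
    width: int
    height: int


def world_to_camera(p: Vec3, rec: PastImageRecord) -> Vec3:
    """Express a world point in the past camera's frame: R^T (p - t)."""
    d = [p[i] - rec.cam_position[i] for i in range(3)]
    R = rec.cam_rotation
    # (R^T d)_j = sum_i R[i][j] * d[i]
    x, y, z = (sum(R[i][j] * d[i] for i in range(3)) for j in range(3))
    return (x, y, z)


def project(p: Vec3, rec: PastImageRecord, K: Intrinsics) -> Optional[Tuple[float, float]]:
    """Pixel where a drone at world point p would appear in the past image, or None."""
    x, y, z = world_to_camera(p, rec)
    if z <= 0.0:
        return None  # point is behind the past camera
    u = K.fx * x / z + K.cx
    v = K.fy * y / z + K.cy
    if 0.0 <= u < K.width and 0.0 <= v < K.height:
        return (u, v)
    return None  # in front of the camera but outside the image


IDENTITY: Mat3 = ((1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0))
rec = PastImageRecord(cam_position=(0.0, 0.0, 0.0), cam_rotation=IDENTITY)
K = Intrinsics(fx=500.0, fy=500.0, cx=320.0, cy=180.0, width=640, height=360)
print(project((1.0, 0.0, 2.0), rec, K))  # (570.0, 180.0): 2 m ahead, 1 m to the right
```

A record for which `project` returns `None` cannot show the drone, so it would be excluded as a background candidate; in a pinhole model the drawn model's apparent size also shrinks roughly in proportion to `fx / z`.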

The drone's position was obtained from motion capture cameras, but could also be derived from other sources such as visual odometry. The system used the drone's single onboard camera as its only image source, and a degraded transmission environment was simulated by dropping frames. I designed, conducted, and analyzed user studies to evaluate the system's efficacy; they showed large improvements across many metrics, even at a 2 Hz transmission rate.
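Dropping frames to emulate a narrow link amounts to rate-limiting the image stream. A minimal sketch, assuming a simple fixed-rate policy (the exact dropping scheme used in the thesis may differ); `FrameThrottle` is a hypothetical name:

```python
from typing import Optional


class FrameThrottle:
    """Pass at most `rate_hz` frames per second and drop the rest.

    Hypothetical stand-in for a degraded wireless link; a real link
    might also drop frames irregularly.
    """

    def __init__(self, rate_hz: float) -> None:
        if rate_hz <= 0:
            raise ValueError("rate_hz must be positive")
        self.period = 1.0 / rate_hz  # minimum spacing between forwarded frames, in seconds
        self._last_sent: Optional[float] = None

    def accept(self, stamp: float) -> bool:
        """Return True if the frame captured at `stamp` (seconds) is forwarded."""
        if self._last_sent is None or stamp - self._last_sent >= self.period:
            self._last_sent = stamp
            return True
        return False


# Simulate a 30 Hz camera for 2 s behind a 2 Hz link.
throttle = FrameThrottle(rate_hz=2.0)
forwarded = [i for i in range(60) if throttle.accept(i / 30)]
print(forwarded)  # [0, 15, 30, 45]
```

In a ROS node, `accept()` could be called from the image subscriber's callback with `msg.header.stamp.to_sec()`, republishing only the frames it accepts.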

The biggest challenge was deciding which past images to use. I wrote multiple evaluation functions and modularized the code so that the function could be selected from the launch files. I also automated the generation of new launch files using xacro and Python.
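The pattern of several interchangeable evaluation functions, one of which is chosen by a parameter set in a launch file, might look like the following. This is a sketch under assumptions: the two scoring rules, the 1.5 m target distance, the `spirit` package and `spirit_node.py` node names, and the `evaluation_method` parameter are all hypothetical, and the launch files here are generated with Python's `xml.etree.ElementTree` rather than xacro.

```python
import math
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class Record:
    stamp: float        # capture time in seconds
    cam_position: Vec3  # camera position in the world frame at capture time


# Hypothetical evaluation functions: a higher score means a better background image.
def score_newest(rec: Record, drone: Vec3, now: float) -> float:
    """Prefer the most recent frame."""
    return -(now - rec.stamp)


def score_distance(rec: Record, drone: Vec3, now: float, target: float = 1.5) -> float:
    """Prefer a frame taken about `target` metres from the drone's current position."""
    return -abs(math.dist(rec.cam_position, drone) - target)


EVALUATORS: Dict[str, Callable[[Record, Vec3, float], float]] = {
    "newest": score_newest,
    "distance": score_distance,
}


def select_record(records: List[Record], drone: Vec3, now: float, method: str) -> Record:
    """Pick the best record using the evaluation function registered as `method`."""
    if method not in EVALUATORS:
        raise ValueError(f"unknown evaluation method: {method!r}")
    score = EVALUATORS[method]
    return max(records, key=lambda r: score(r, drone, now))


def make_launch(method: str) -> str:
    """Build a minimal roslaunch XML that sets the node's private evaluation_method param."""
    launch = ET.Element("launch")
    node = ET.SubElement(launch, "node", pkg="spirit", type="spirit_node.py", name="spirit")
    ET.SubElement(node, "param", name="evaluation_method", value=method)
    return ET.tostring(launch, encoding="unicode")


# One generated launch file per registered evaluation function.
launch_files = {f"spirit_{name}.launch": make_launch(name) for name in EVALUATORS}

records = [
    Record(0.0, (0.0, 0.0, 0.0)),
    Record(1.0, (0.0, 0.0, 5.0)),
    Record(2.0, (0.0, 0.0, 20.0)),
]
drone = (0.0, 0.0, 1.5)
print(select_record(records, drone, now=2.5, method="newest").stamp)    # 2.0
print(select_record(records, drone, now=2.5, method="distance").stamp)  # 0.0
```

Inside the node, the choice could then be read with `rospy.get_param("~evaluation_method", "newest")`; because the `<param>` tag sits inside `<node>`, it is private to that node, hence the `~` prefix. Keeping the functions in a dictionary means a new strategy only needs to be registered, with no change to the selection logic.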

Source code

The source code is available on GitHub. It is well documented, with a wiki, checklists, and instructions. It contains the core ROS code; data collection, analysis, and visualization code; and the full LaTeX source code for the thesis.

A summary of the individual components is here, and the thesis itself can be downloaded here.